In the dynamic digital marketing landscape, one term that comes up constantly is SEO, which stands for Search Engine Optimization. While many individuals and businesses have a basic understanding of SEO, it’s essential to delve deeper into its components. Technical SEO is particularly important. It is the backbone of a website’s ability to achieve high rankings on search engines like Google, Bing, and others.

Technical SEO differs from traditional SEO. It does not primarily focus on content creation, link building, or keyword optimization. However, those elements are still essential. Instead, technical SEO emphasizes the underlying infrastructure of a website. This foundation helps search engines crawl, index, and understand the site’s content. This includes optimizing website speed, ensuring mobile-friendliness, implementing secure protocols (like HTTPS), creating an XML sitemap, and ensuring that robots.txt files are correctly configured to guide search engines.

By mastering these technical aspects, web admins can significantly enhance a site’s visibility in search results, improve user experience, and increase organic traffic. Knowledge of technical SEO is not just beneficial; it is essential for any successful digital marketing strategy because it directly influences how well a website performs in the competitive online marketplace.

In this blog, we’ll dive into the key aspects of technical SEO and how each one improves your website’s performance and visibility.

1. Website Crawlability

Before a search engine can assign a rank to your website, it must first crawl and index it. Crawling is how search engine bots, often called spiders or crawlers, systematically navigate your website to discover its content. This involves following links from one page to another and reading the information available on each page.

Crawlability describes how easily these bots can access your website’s structure and content. Factors influencing crawlability include your site’s architecture, its robots.txt file (which can allow or disallow access to specific pages), and internal linking practices. If your website is not designed for effective crawling, search engines can have difficulty indexing your pages. Pages that are not indexed will not appear in search engine results, which significantly reduces their visibility to potential visitors. Ensuring that your site is easily crawlable is crucial because it improves its chances of being indexed and ranked appropriately by search engines.
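To make the allow/disallow idea concrete, here is a minimal sketch that checks whether a URL is crawlable under a set of robots.txt rules, using Python’s standard `urllib.robotparser`. The rules and the example.com URLs are hypothetical, made up for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # load rules from text, no network call
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/technical-seo", "/admin/settings"):
        url = "https://example.com" + path
        print(url, "->", "crawlable" if is_crawlable(ROBOTS_TXT, url) else "blocked")
```

Running a check like this against your real robots.txt before deploying changes is one way to catch an accidental block on an important page.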

Essential Elements for Crawlability:

- Robots.txt file: This file guides search engine bots on which pages to crawl or avoid. Ensuring your robots.txt file is configured correctly can prevent accidental blocking of essential pages.

- XML Sitemap: An XML sitemap is a blueprint of your site. It tells search engines which pages, videos, and other content are available for crawling. Submitting an up-to-date sitemap to Google Search Console helps search engines find and index your content.
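As a rough illustration of what an XML sitemap contains, the sketch below builds a small one with Python’s standard library. The page URLs and dates are placeholders, and the function name `build_sitemap` is our own; a real site would usually generate this from its CMS or routing table.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is a YYYY-MM-DD string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder pages, for illustration only.
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog/technical-seo", "2024-02-01"),
    ]))
```

The output can be saved as sitemap.xml at the site root and then submitted in Google Search Console.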

2. Website Speed

Website speed is an essential ranking factor in search engine optimization (SEO), directly impacting user experience and overall site engagement. Both users and search engines favor fast-loading websites because they contribute to a smoother, more enjoyable browsing experience. Studies have shown that users often leave a site that takes more than a few seconds to load. This leads to higher bounce rates and lower conversion rates.

Google has explicitly stated that page speed plays a role in its ranking algorithms. This means that websites that take longer to load may face challenges in achieving high visibility in search results. As a result, slower sites struggle to compete with faster ones and can fall behind in organic search rankings and user acquisition. For website owners and digital marketers, optimizing site speed should be a priority. It enhances user satisfaction and improves the likelihood of better positions in search engine results pages (SERPs).

How to Improve Website Speed:

- Optimize images: Compress images and use the right formats, such as JPEG, PNG, and WebP, to improve load times.

- Minify CSS, JavaScript, and HTML: Minification reduces file sizes by removing unnecessary characters. This leads to faster loading times and can improve user experience and SEO rankings.

- Use a Content Delivery Network (CDN): A CDN distributes content across global servers, which reduces load times for users. It also enhances reliability during traffic spikes and can improve SEO, resulting in a better user experience.
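To show what “removing unnecessary characters” means in practice, here is a deliberately naive CSS minifier. It is a sketch for illustration, not a production tool: it ignores edge cases such as punctuation inside quoted strings, and real projects would normally rely on a dedicated build tool instead.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and redundant whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # trim space around punctuation
    css = css.replace(";}", "}")                          # drop the last semicolon in a block
    return css.strip()


if __name__ == "__main__":
    source = """
    /* header */
    body {
        margin: 0;
        color: #333;
    }
    """
    minified = minify_css(source)
    print(minified)
    print(f"{len(source)} characters -> {len(minified)} characters")
```

Even on this tiny stylesheet the output is a fraction of the original size, which is the saving minification delivers across every CSS, JavaScript, and HTML file a page loads.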

3. Mobile-Friendliness

As mobile usage continues to soar, Google now uses a mobile-first indexing approach for websites. This means it primarily uses the mobile version of a website for indexing and ranking in search results. This shift underscores the importance of having a mobile-optimized site. Neglecting this aspect can significantly decrease organic traffic and search engine rankings. Websites that are not responsive are at a competitive disadvantage because they may not provide a user-friendly experience on mobile devices, which ultimately affects their visibility and performance in search results. Ensuring your website is fully optimized for mobile users is a necessity for maintaining your online presence and visibility in today’s digital landscape.

Mobile Optimization Tips:

- Responsive Design: Ensure your website adapts to different screen sizes and devices.

- Test Mobile Usability: Use tools like Lighthouse in Chrome DevTools to ensure your site provides a seamless mobile experience. (Google retired its standalone Mobile-Friendly Test tool in December 2023.)
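One quick, partial check you can automate is whether a page declares a responsive viewport, which responsive designs generally rely on. The sketch below uses Python’s standard `html.parser`; the function name is our own, and passing this check does not by itself prove a page is mobile-friendly.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag == "meta" and self.viewport is None:
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "viewport":
                self.viewport = attr.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page sets width=device-width in its viewport meta tag."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()


if __name__ == "__main__":
    page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    print(has_responsive_viewport(page))
```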

4. Secure Websites (HTTPS)

Google has confirmed that HTTPS is a ranking signal. Websites that use HTTPS encryption are more secure, which makes HTTPS vital for both user trust and search engine rankings.

How to Secure Your Website:

- Install an SSL certificate (Secure Sockets Layer; modern certificates use its successor, TLS) to create an encrypted connection between your server and users, protecting sensitive data. This enhances security, boosts SEO, and builds trust. To secure your website, generate a certificate signing request (CSR), obtain the certificate from a Certificate Authority, and install it on your server.
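Certificates expire, and an expired certificate triggers browser warnings that undermine the trust HTTPS is meant to build. Here is a small sketch, using only Python’s standard `ssl` and `socket` modules, that reads a site’s certificate and reports how many days remain. The helper names and the example.com host are illustrative assumptions.

```python
import socket
import ssl
import time


def days_until_expiry(not_after: str, now: float) -> float:
    """Days between `now` (epoch seconds) and a certificate's notAfter date string."""
    expires = ssl.cert_time_to_seconds(not_after)
    return (expires - now) / 86400


def fetch_not_after(hostname: str, port: int = 443) -> str:
    """Connect over TLS and return the certificate's notAfter field."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


if __name__ == "__main__":
    host = "example.com"  # placeholder host; needs network access
    remaining = days_until_expiry(fetch_not_after(host), time.time())
    print(f"{host}: certificate expires in {remaining:.0f} days")
```

Scheduling a check like this lets you renew the certificate well before visitors ever see a warning.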

5. Structured Data (Schema Markup)

Structured data, often called schema markup, is a powerful tool that enhances your website’s visibility to search engines. By adding this specialized markup to your website’s HTML code, you help search engines interpret your content and understand the context of your pages. This process involves labeling elements of your site’s content, such as articles, products, events, and reviews, with specific tags that clearly define their purpose.

The primary advantage of using structured data is that it makes your pages eligible for rich snippets in search engine results. These rich snippets can display additional information, such as star ratings from customer reviews, product prices, availability, or answers to frequently asked questions (FAQs). By presenting this enriched information, your website stands out in search results, which can attract more user clicks and engagement.

Benefits of Structured Data:

- Rich Snippets: These are enhanced search results with extra details like ratings or images that make your pages stand out. They can increase your click-through rate (CTR), as users are more likely to click on visually appealing links, driving more organic traffic to your site.

- Voice Search Optimization: This matters more as voice-activated devices become more common. Structured data helps search engines understand your content, increasing the likelihood of appearing in voice search results with concise answers.
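Structured data is commonly added as a JSON-LD script tag using the schema.org vocabulary. The sketch below generates one for a product; the product details are invented, and the function name `product_jsonld` is our own. Treat it as a starting point and validate the output with a structured data testing tool before relying on it.

```python
import json


def product_jsonld(name, price, currency, rating, review_count):
    """Return a JSON-LD <script> tag describing a product with an offer and a rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


if __name__ == "__main__":
    # Invented product details, for illustration only.
    print(product_jsonld("Garden Trowel", 12.5, "USD", 4.6, 87))
```

The resulting tag goes in the page’s HTML, where it labels the price, availability, and rating that rich snippets can display.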

6. Fixing Broken Links and Redirects

Broken links, which typically return 404 errors, can significantly impact your website’s user experience and search engine optimization (SEO) performance. When users click on a link that leads to a non-existent page, they become frustrated. This can lead to a poor perception of your site’s reliability and professionalism. This negative experience can increase bounce rates as visitors quickly leave your site in search of more functional alternatives.

From an SEO perspective, search engines like Google strive to deliver high-quality, trustworthy content to users. If they find numerous broken links while crawling your site, they may interpret this as a sign of poor maintenance. This perception might decrease your site’s authority, and your rankings in search results could suffer as a result. Regularly monitoring and repairing broken links is essential for maintaining a positive user experience and safeguarding your website’s standing with search engines.

How to Fix Broken Links:

- Regularly check for 404 errors using tools like Google Search Console or Screaming Frog. These tools help identify and analyze broken links, improving user experience and SEO.

- Set up 301 redirects for moved or deleted pages. This helps to preserve link equity. It also avoids 404 errors. These steps ensure better SEO performance and user experience.
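For a small site, a rough stand-in for those tools is a script that requests each URL and flags error responses. The sketch below uses Python’s standard `urllib`; the function names are our own, and the status lookup is injectable so the logic can be tried without network access. Note that `urlopen` follows redirects, so a working 301 is reported with the status of its destination.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status for url, or None if the server cannot be reached."""
    # HEAD avoids downloading the body; some servers reject it, so treat results as a first pass.
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses arrive as exceptions
    except urllib.error.URLError:
        return None  # DNS failure, refused connection, timeout, etc.


def find_broken_links(urls, get_status=http_status):
    """Return the URLs that are unreachable or answer with a 4xx/5xx status."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append(url)
    return broken


if __name__ == "__main__":
    # Placeholder URLs; replace with links collected from your own pages.
    print(find_broken_links(["https://example.com/", "https://example.com/missing-page"]))
```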

7. Canonicalization

Canonicalization is a crucial aspect of web development and search engine optimization (SEO). It involves designating a preferred version of a web page when there are duplicates or closely related pages on the site. This process is essential because search engines, like Google, can otherwise have difficulty determining which version of a page should be prioritized in search results.

When multiple pages contain similar content, a lack of proper canonical tags can divide ranking signals among these pages. This division dilutes the authority and visibility of each page and makes it more challenging for any single page to achieve a high ranking. By implementing canonical tags, you provide clear instructions to search engines about which page is the primary version. This consolidates ranking power and helps users find the most relevant content, which improves your site’s SEO performance and enhances the user experience by guiding visitors to the intended destination.

How to Handle Duplicate Content:

- Use rel="canonical" tags to indicate the original content source and manage duplicates.

- Set up proper 301 redirects for unnecessary duplicate content. This will direct users and search engines to the original version. It improves SEO and enhances user experience.
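To audit canonical tags, you can read the `<link rel="canonical">` element from each page and confirm that duplicates point at the version you intend. This sketch uses Python’s standard `html.parser`; the class and function names and the example URLs are illustrative assumptions.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Captures the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag == "link" and self.canonical is None:
            attr = dict(attrs)
            rels = (attr.get("rel") or "").lower().split()
            if "canonical" in rels:
                self.canonical = attr.get("href")


def canonical_url(html: str):
    """Return the canonical URL declared in the HTML, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


if __name__ == "__main__":
    # A hypothetical duplicate page (for example, a URL with tracking parameters).
    page = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
    print(canonical_url(page))
```

Pages that return None, or that point at an unexpected URL, are the ones to review first.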

8. Internal Linking Structure

An optimized internal linking structure is crucial for helping search engines discover and navigate pages on your website. By strategically linking related content within your site, you help search engines understand your content hierarchy and how your pages relate to one another. This improves crawlability, because search engine bots can find and access all your pages more easily. It also enhances indexability, which is the ability of search engines to include those pages in their search results. As a result, essential pages are prioritized in the indexing process and are more likely to be displayed when users make relevant queries. This interconnected framework improves your website’s visibility and lets users find related content more intuitively, which ultimately improves the overall user experience.

Internal Linking Best Practices:

- Use descriptive anchor text that indicates the content of the linked page. This helps users know what to expect and improves search engine optimization. For instance, instead of “click here,” use specific phrases like “sustainable gardening practices.”

- Linking to high-authority pages improves your content’s value and rankings. It offers resources for your audience and boosts your page’s credibility and visibility in search results.
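The anchor text advice is easy to audit automatically. The sketch below collects links from a page and flags those with generic anchor text. The list of generic phrases is our own assumption and should be adjusted to suit your site.

```python
from html.parser import HTMLParser

# Assumed list of non-descriptive phrases; extend it for your own content.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "this page"}


class AnchorCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href="..."> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # normalize whitespace
            self.links.append((self._href, text))
            self._href = None


def generic_anchor_links(html: str):
    """Return the links whose anchor text says nothing about the target page."""
    collector = AnchorCollector()
    collector.feed(html)
    return [(href, text) for href, text in collector.links if text.lower() in GENERIC_ANCHORS]


if __name__ == "__main__":
    page = ('<p>Read about <a href="/guide">sustainable gardening practices</a> '
            'or <a href="/more">click here</a>.</p>')
    print(generic_anchor_links(page))
```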

9. Core Web Vitals

Google has incorporated Core Web Vitals into its search ranking criteria. This change emphasizes the importance of user experience in evaluating website performance. Core Web Vitals consist of three key metrics: loading performance, interactivity, and visual stability.

Improving Core Web Vitals:

- Largest Contentful Paint (LCP): This metric measures loading performance, specifically how long it takes for the largest visible content element on a page to render. An optimal LCP score is crucial because users commonly abandon pages that take too long to load, which directly impacts bounce rates and user satisfaction.

- First Input Delay (FID): This metric measures interactivity, specifically the time it takes for a page to respond to a user’s first interaction. A swift response enhances user engagement and encourages users to stay on the site longer. Note that Google replaced FID with Interaction to Next Paint (INP) as the Core Web Vital for interactivity in March 2024.

- Cumulative Layout Shift (CLS): This metric measures visual stability by tracking unexpected layout shifts that happen while users read or interact with the content. A stable layout prevents frustrating experiences, such as a user trying to click a button that suddenly moves, and helps maintain user trust and engagement.

These metrics have become increasingly vital for search engine optimization (SEO) and for user engagement because they reflect a site’s ability to deliver a smooth and enjoyable browsing experience. Websites that excel in these areas tend to see improved rankings in search results and better user retention rates.
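When you collect these measurements, it helps to rate them consistently. The sketch below classifies a value against the “good” and “poor” boundaries that Google publishes for each metric, as we understand them at the time of writing; confirm the numbers against current documentation. It also includes Interaction to Next Paint (INP), which Google adopted as the interactivity metric in place of FID in 2024.

```python
# (good_max, poor_min) boundaries per metric; values assumed from Google's published guidance.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a measurement as 'good', 'needs improvement', or 'poor'."""
    good_max, poor_min = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= poor_min:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Made-up measurements, for illustration only.
    for metric, value in {"LCP": 3.1, "INP": 180, "CLS": 0.31}.items():
        print(f"{metric} = {value}: {rate(metric, value)}")
```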

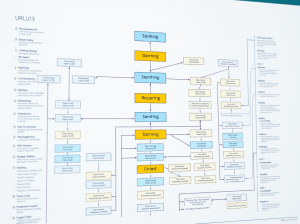

10. Website Architecture and URL Structure

A well-organized website architecture is crucial for enhancing your site’s overall performance. It facilitates easier crawling and indexing by search engines. When your site is structured logically, search engines can navigate the pages more efficiently. This allows them to understand the content and its relevance to user queries. A clean and coherent URL structure also plays a significant role in this process. It aids search engines in understanding the hierarchy and content of your site. It also improves the user experience. A clear structure makes it intuitive for visitors to find the information they are seeking. Clear URLs with relevant keywords help users know what to expect on a page. This increases the likelihood of engagement and reduces bounce rates. Investing time to develop a thoughtful website architecture enhances visibility in search results. It leads to a more satisfying user experience.

Best Practices for Website Architecture:

- Keep URLs short, descriptive, and keyword-rich. They should reflect the page content for clarity and improve SEO, so avoid unnecessary words and characters.

- Use a logical hierarchical structure like domain.com/category/product to organize your website, improving navigation and SEO.

- Ensure that key pages are easily reachable. Users should require no more than a few clicks from the homepage for optimal access.
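Click depth can be measured with a breadth-first search over your internal links. In the sketch below, the site is represented as a dictionary mapping each page to the pages it links to. The sample structure is invented, and the limit of three clicks is our assumption for what “a few clicks” means.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_deeper_than(links, max_clicks=3, home="/"):
    """Return reachable pages that need more than max_clicks clicks from home."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


if __name__ == "__main__":
    # Invented site structure, for illustration only.
    site = {
        "/": ["/shoes", "/blog"],
        "/shoes": ["/shoes/running"],
        "/shoes/running": ["/shoes/running/trail"],
        "/shoes/running/trail": ["/shoes/running/trail/model-x"],
    }
    print(click_depths(site))
    print(pages_deeper_than(site))
```

Pages flagged here are candidates for a link from a category page or the homepage. Pages that never appear in the result of `click_depths` are not reachable through internal links at all.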

Final Thoughts on Technical SEO

Technical SEO is often the backbone of a website’s search engine performance. Technical SEO might seem more complex than traditional SEO tactics. However, it ensures that search engines can easily crawl, index, and rank your site’s content. Focus on website crawlability. Ensure your site’s speed and security. Pay attention to its structure. By doing this, you can build a solid foundation for a successful digital marketing strategy.

Technical SEO is an ongoing process. The digital landscape constantly evolves, so staying informed about the latest updates, trends, and best practices is key to preserving and enhancing your website’s visibility in search results. Regular audits and updates help ensure that your site remains optimized for both current algorithms and user expectations.